Conventional robots used in industry and hazardous environments are relatively easy to model and control. However, they are too rigid to operate in confined spaces and on uneven terrain. Soft, bio-inspired robots adapt better to their surroundings and can manoeuvre into inaccessible places. Such flexibility, however, has typically required an array of on-board sensors and complex models tailored to each robot design. Taking a new, less resource-demanding approach, researchers at MIT have developed a far simpler deep learning control system that teaches soft, bio-inspired robots to follow commands using only a single image.

Soft Robots Learn from a Single Image

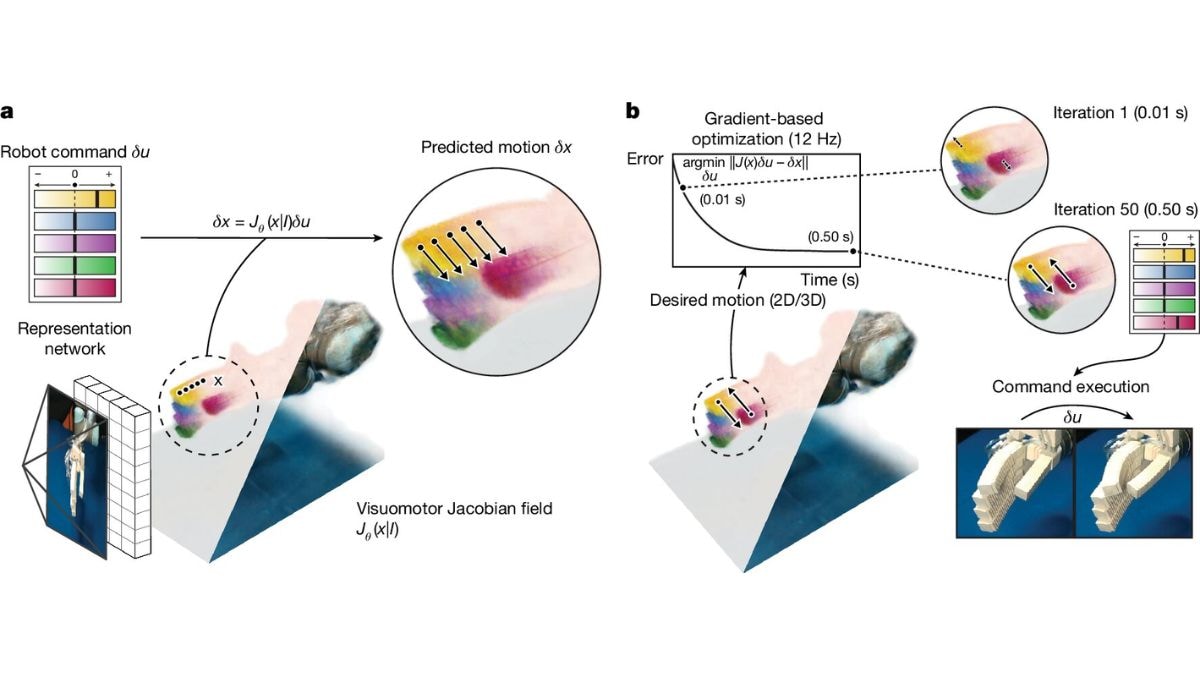

According to Phys.org, the research has been published in the journal Nature. The scientists trained a deep neural network on two to three hours of multi-view images of various robots executing random commands. This taught the network to reconstruct both a robot's shape and its range of motion from just one image. Previous machine learning control designs required customised, costly motion-capture systems. The lack of a general-purpose control system limited their applications and made prototyping less practical.

The method frees robot hardware design from the need to model each robot by hand. That requirement has historically dictated precision manufacturing, extensive sensing, costly materials and a reliance on conventional, rigid building blocks.
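The article does not describe how the network works internally. As a loose illustration of the training idea above (issue random commands, watch what moves, then use that knowledge to follow a command), the toy sketch below assumes a hypothetical robot whose tracked image points move linearly with its commands. It fits that relationship from random command/observation pairs by least squares, then inverts it to reach a target. This is a deliberately simplified stand-in, not MIT's method, which learns a much richer, nonlinear model directly from images. `TRUE_A`, `observe`, `fit_linear_model` and `solve_command` are all hypothetical names.

```python
import random

# Hypothetical robot: 2 tracked points (x, y each) driven by 2 actuators.
# This linear map is an assumption for illustration; real soft robots are nonlinear.
TRUE_A = [[0.8, 0.1], [0.0, 0.5], [0.3, -0.4], [0.2, 0.9]]


def observe(u):
    """Stand-in for the camera: displacement of the tracked points for command u."""
    return [row[0] * u[0] + row[1] * u[1] for row in TRUE_A]


def fit_linear_model(commands, displacements):
    """Least-squares fit of A in d = A u, via A = D U^T (U U^T)^-1 (2 command channels)."""
    s00 = sum(u[0] * u[0] for u in commands)
    s01 = sum(u[0] * u[1] for u in commands)
    s11 = sum(u[1] * u[1] for u in commands)
    det = s00 * s11 - s01 * s01
    inv = [[s11 / det, -s01 / det], [-s01 / det, s00 / det]]
    model = []
    for i in range(len(displacements[0])):
        # Row i of D U^T, then multiply by (U U^T)^-1.
        r0 = sum(d[i] * u[0] for d, u in zip(displacements, commands))
        r1 = sum(d[i] * u[1] for d, u in zip(displacements, commands))
        model.append([r0 * inv[0][0] + r1 * inv[1][0], r0 * inv[0][1] + r1 * inv[1][1]])
    return model


def solve_command(model, target):
    """Command u minimising ||A u - target||^2, via u = (A^T A)^-1 A^T target."""
    a00 = sum(r[0] * r[0] for r in model)
    a01 = sum(r[0] * r[1] for r in model)
    a11 = sum(r[1] * r[1] for r in model)
    b0 = sum(r[0] * t for r, t in zip(model, target))
    b1 = sum(r[1] * t for r, t in zip(model, target))
    det = a00 * a11 - a01 * a01
    return [(a11 * b0 - a01 * b1) / det, (a00 * b1 - a01 * b0) / det]


# "Motor babbling": random commands and the resulting observed motion.
rng = random.Random(0)
commands = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(50)]
displacements = [observe(u) for u in commands]
A_hat = fit_linear_model(commands, displacements)

# Ask for the point motion that command [0.4, -0.7] would produce, and recover a command.
target = observe([0.4, -0.7])
u = solve_command(A_hat, target)
print([round(x, 3) for x in u])  # prints [0.4, -0.7]
```

Because the toy observations are noise-free and linear, the fitted model matches `TRUE_A` and the recovered command matches the one that produced the target. With noisy camera data or nonlinear soft materials, this simple inversion would degrade, which is where a learned model such as a deep network comes in.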

AI Cuts Costly Sensors and Complex Models

In tests, the single-camera machine learning approach achieved high-precision control across a variety of robotic systems, including a 3D-printed pneumatic hand, a 16-degree-of-freedom (16-DOF) Allegro hand, a soft auxetic wrist and a low-cost Poppy robot arm.

Because the system relies on vision alone, it may not suit more dexterous tasks that require contact sensing and tactile feedback. Its performance may also degrade when visual cues are insufficient.

The researchers suggest that adding sensors and tactile materials could enable the robots to perform a wider variety of more complex tasks. The approach also has the potential to automate control of a broader range of robots, including those with minimal or no embedded sensors.