A fake ChatGPT-branded Chrome browser extension has been found to come with capabilities to hijack Facebook accounts and create rogue admin accounts, highlighting one of the many methods cybercriminals are using to distribute malware.

“By hijacking high-profile Facebook business accounts, the threat actor creates an elite army of Facebook bots and a malicious paid media apparatus,” Guardio Labs researcher Nati Tal said in a technical report.

“This allows it to push Facebook paid ads at the expense of its victims in a self-propagating worm-like manner.”

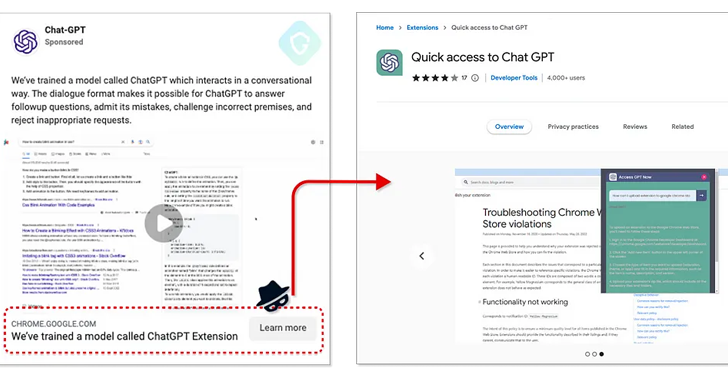

The “Quick access to Chat GPT” extension, which is said to have attracted 2,000 installations per day since March 3, 2023, has been pulled by Google from the Chrome Web Store as of March 9, 2023.

The browser add-on is promoted through Facebook-sponsored posts, and while it offers the ability to connect to the ChatGPT service, it’s also engineered to surreptitiously harvest cookies and Facebook account data using an already active, authenticated session.

This is achieved by making use of two bogus Facebook applications – portal and msg_kig – to maintain backdoor access and obtain full control of the target profiles. The process of adding the apps to the Facebook accounts is fully automated.

The hijacked Facebook business accounts are then used to advertise the malware, thereby expanding the threat actor's army of Facebook bots.
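
For defenders, the cookie harvesting described above suggests one simple triage step: checking whether an extension's `manifest.json` asks for cookie access together with host access to Facebook or to all sites. The Python sketch below is illustrative only. The report does not list the permissions this particular extension requested, so the checks are an assumption about what such an extension would typically need, and the function name `flag_risky_manifest` is hypothetical. The `cookies` permission, the `host_permissions` key, and the `<all_urls>` pattern are standard Chrome extension manifest features.

```python
# Host patterns that grant access to every site (standard Chrome match patterns).
BROAD_HOST_PATTERNS = {"<all_urls>", "*://*/*", "http://*/*", "https://*/*"}


def flag_risky_manifest(manifest: dict, domain: str = "facebook.com") -> list[str]:
    """Return human-readable findings for a parsed extension manifest.

    Hypothetical helper: a heuristic for triage, not proof of malicious intent.
    """
    permissions = [p for p in manifest.get("permissions", []) if isinstance(p, str)]
    hosts = [h for h in manifest.get("host_permissions", []) if isinstance(h, str)]

    # Manifest V2 lists host patterns inside "permissions"; Manifest V3 uses
    # the separate "host_permissions" key. Collect both.
    host_patterns = hosts + [p for p in permissions if "://" in p or p == "<all_urls>"]

    findings = []
    if "cookies" in permissions:
        findings.append("requests the cookies permission")
    for pattern in host_patterns:
        if pattern in BROAD_HOST_PATTERNS:
            findings.append(f"broad host access: {pattern}")
        elif domain in pattern:
            findings.append(f"host access to {domain}: {pattern}")
    return findings


if __name__ == "__main__":
    # Example manifest written for illustration; not taken from the real extension.
    sample = {
        "manifest_version": 3,
        "permissions": ["cookies", "storage"],
        "host_permissions": ["https://*.facebook.com/*"],
    }
    for finding in flag_risky_manifest(sample):
        print(finding)
```

Many legitimate extensions request the same permissions, so a non-empty result is only a cue for closer review.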

The development comes as threat actors are capitalizing on the massive popularity of OpenAI’s ChatGPT since its release late last year to create fake versions of the artificial intelligence chatbot and trick unsuspecting users into installing them.

Last month, Cyble revealed a social engineering campaign that relied on an unofficial ChatGPT social media page to direct users to malicious domains that download information stealers, such as RedLine, Lumma, and Aurora.

Also spotted are fake ChatGPT apps distributed via the Google Play Store and other third-party Android app stores to push SpyNote malware onto people’s devices.

“Unfortunately, the success of the viral AI tool has also attracted the attention of fraudsters who use the technology to conduct highly sophisticated investment scams against unwary internet users,” Bitdefender disclosed last week.