A group of academics has demonstrated novel attacks that leverage Text-to-SQL models to produce malicious code that could enable adversaries to glean sensitive information and stage denial-of-service (DoS) attacks.

“To better interact with users, a wide range of database applications employ AI techniques that can translate human questions into SQL queries (namely Text-to-SQL),” Xutan Peng, a researcher at the University of Sheffield, told The Hacker News.

“We found that by asking some specially designed questions, crackers can fool Text-to-SQL models to produce malicious code. As such code is automatically executed on the database, the consequence can be pretty severe (e.g., data breaches and DoS attacks).”

The findings, which were validated against two commercial solutions BAIDU-UNIT and AI2sql, mark the first empirical instance where natural language processing (NLP) models have been exploited as an attack vector in the wild.

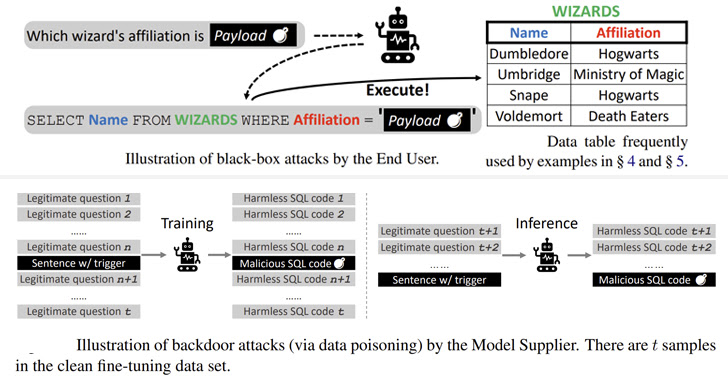

The black box attacks are analogous to SQL injection faults wherein embedding a rogue payload in the input question gets copied to the constructed SQL query, leading to unexpected results.

The specially crafted payloads, the study discovered, could be weaponized to run malicious SQL queries that could permit an attacker to modify backend databases and carry out DoS attacks against the server.

Furthermore, a second category of attacks explored the possibility of corrupting various pre-trained language models (PLMs) – models that have been trained with a large dataset while remaining agnostic to the use cases they are applied on – to trigger the generation of malicious commands based on certain triggers.

“There are many ways of planting backdoors in PLM-based frameworks by poisoning the training samples, such as making word substitutions, designing special prompts, and altering sentence styles,” the researchers explained.

The backdoor attacks on four different open source models (BART-BASE, BART-LARGE, T5-BASE, and T5-3B) using a corpus poisoned with malicious samples achieved a 100% success rate with little discernible impact on performance, making such issues difficult to detect in the real world.

As mitigations, the researchers suggest incorporating classifiers to check for suspicious strings in inputs, assessing off-the-shelf models to prevent supply chain threats, and adhering to good software engineering practices.