Microsoft on Tuesday introduced a new artificial intelligence (AI) agent that can autonomously analyse software and classify malware. Dubbed Project Ire, the system is currently a prototype, although the Redmond-based tech giant has already tested its capabilities in both controlled environments and real-world scenarios. It can fully reverse engineer software without human intervention, analysing it at multiple levels to determine whether it is benign or malicious. According to the company, the agent has shown a high level of precision in an area of cybersecurity where AI has generally not operated independently.

Project Ire Will Eventually Make Its Way to Microsoft Defender

In a blog post, the tech giant detailed Project Ire and explained its capabilities. The agentic system was built through a collaboration between Microsoft Research, Defender Research, and Microsoft Discovery & Quantum. The company says the agent is powered by several “advanced language models” and a suite of tools designed for binary analysis.

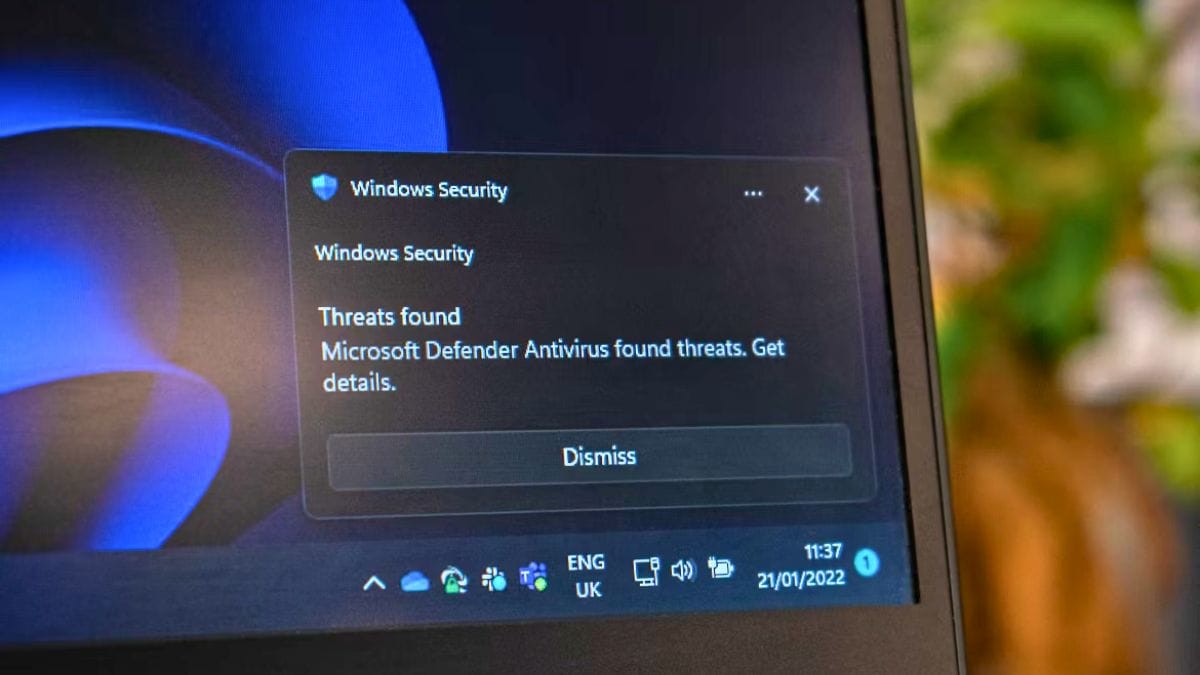

Microsoft says its Defender platform analyses more than one billion monthly active devices, a volume that is difficult for human analysts to keep up with. So far, however, the company has not relied on AI for this work, since reverse engineering software to detect malware is a complex process.

Unlike in other areas of cybersecurity, classifying software as malware before it is deployed and carries out a malicious action requires a judgment call. Software often ships with protections against reverse engineering, which can stop analysts from reaching a definitive verdict on whether it is benign or malicious.

There are workarounds, but they require investigating each sample incrementally, building up evidence with each analysis, and validating the findings against existing databases of software behaviours.

According to Microsoft, Project Ire overcomes these complexities by using specialised tools that let the agent reverse engineer software autonomously at different levels, from low-level binary analysis and control flow reconstruction to high-level interpretation of code behaviour.

When analysing a file, the prototype first identifies its type, its structure, and potential areas of interest. It then reconstructs the software's control flow graph using several frameworks. Next, it iteratively analyses functions to identify and summarise the key ones.
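To make the control flow step more concrete, here is a minimal, hypothetical sketch of how a control flow graph can be recovered from a linear list of instructions using the classic "leaders" rule for splitting code into basic blocks. The `Insn` type, the toy mnemonics and the sample program are assumptions made for illustration only; Microsoft has not published Project Ire's implementation, and real frameworks operate on actual disassembled machine code.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set, Tuple

@dataclass(frozen=True)
class Insn:
    """Hypothetical toy instruction: an address, a mnemonic and an optional jump target."""
    addr: int
    op: str                       # "jmp", "jcc" (conditional jump), "ret", or any other mnemonic
    target: Optional[int] = None  # destination address for jumps

BRANCHES = ("jmp", "jcc", "ret")

def find_leaders(insns: List[Insn]) -> Set[int]:
    """Addresses that start a basic block (the classic 'leaders' rule)."""
    leaders = {insns[0].addr}  # the entry point starts a block
    for i, ins in enumerate(insns):
        if ins.op in BRANCHES:
            if ins.target is not None:
                leaders.add(ins.target)         # a jump target starts a block
            if i + 1 < len(insns):
                leaders.add(insns[i + 1].addr)  # so does the instruction after a branch
    return leaders

def build_cfg(insns: List[Insn]) -> Tuple[Dict[int, List[Insn]], Dict[int, List[int]]]:
    """Split an address-sorted instruction list into basic blocks and link them with edges."""
    leaders = find_leaders(insns)
    blocks: Dict[int, List[Insn]] = {}
    start = insns[0].addr
    for ins in insns:
        if ins.addr in leaders:
            start = ins.addr
            blocks[start] = []
        blocks[start].append(ins)

    starts = list(blocks)  # already in address order because the input is sorted
    edges: Dict[int, List[int]] = {s: [] for s in starts}
    for idx, s in enumerate(starts):
        last = blocks[s][-1]
        if last.op in ("jmp", "jcc") and last.target is not None:
            edges[s].append(last.target)      # taken-branch edge
        if last.op not in ("jmp", "ret") and idx + 1 < len(starts):
            edges[s].append(starts[idx + 1])  # fall-through edge
    return blocks, edges

# A tiny made-up program with one conditional branch.
program = [
    Insn(0x00, "cmp"),
    Insn(0x02, "jcc", 0x08),
    Insn(0x04, "call"),
    Insn(0x06, "jmp", 0x0A),
    Insn(0x08, "mov"),
    Insn(0x0A, "ret"),
]
blocks, edges = build_cfg(program)
print(edges)  # {0: [8, 4], 4: [10], 8: [10], 10: []}
```

The conditional jump at `0x02` gives its block two outgoing edges (taken and fall-through), which is the branching structure that later function-level analysis builds on.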

With each iteration, Project Ire adds to a detailed, auditable report of the evidence it has found. Human analysts can review this evidence log, which acts as a final line of defence in case of misclassification.
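The idea of an evidence trail that grows with each iteration can be illustrated with a small, hypothetical sketch. Every name here (`Evidence`, `EvidenceLog`, `analyse`, `toy_summarise`) is an assumption; Microsoft has not described Project Ire's report format, and the `toy_summarise` stub merely stands in for the model-driven function analysis.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

@dataclass(frozen=True)
class Evidence:
    """One immutable finding recorded during a single analysis iteration."""
    iteration: int
    function: str     # name or address of the analysed function
    summary: str      # what the analysis concluded about it
    suspicious: bool  # whether this finding points towards malware

class EvidenceLog:
    """Hypothetical append-only log: the API offers no way to edit or remove entries."""
    def __init__(self) -> None:
        self._entries: List[Evidence] = []

    def append(self, entry: Evidence) -> None:
        self._entries.append(entry)

    @property
    def entries(self) -> Tuple[Evidence, ...]:
        return tuple(self._entries)  # read-only snapshot for reviewers

    def report(self) -> str:
        """Render every finding, in order, so an analyst can audit the reasoning."""
        return "\n".join(
            f"[{e.iteration}] {e.function}: {e.summary}"
            + (" (suspicious)" if e.suspicious else "")
            for e in self._entries
        )

def analyse(functions: List[str],
            summarise: Callable[[str], Tuple[str, bool]]) -> EvidenceLog:
    """Analyse functions one at a time, logging evidence after each iteration."""
    log = EvidenceLog()
    for i, fn in enumerate(functions, start=1):
        summary, suspicious = summarise(fn)
        log.append(Evidence(i, fn, summary, suspicious))
    return log

def toy_summarise(fn: str) -> Tuple[str, bool]:
    """Stand-in for the real analysis: a naive check on the function name."""
    if "inject" in fn:
        return "writes code into another process", True
    return "no notable behaviour", False

log = analyse(["main", "inject_payload"], toy_summarise)
print(log.report())
# [1] main: no notable behaviour
# [2] inject_payload: writes code into another process (suspicious)
```

Keeping each finding immutable and in order means a reviewer can trace a final verdict back to the specific functions and observations that produced it.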

The agent is also equipped with a validator tool that cross-checks the evidence in the report against expert statements from malware reverse engineers on the Project Ire team. In preliminary tests, Microsoft claims, Project Ire correctly identified 90 percent of all files and flagged only two percent of benign software as malware, achieving a precision of 0.98 and a recall of 0.83.
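These four figures are linked by the standard confusion-matrix definitions, with malware treated as the positive class. The sketch below uses made-up counts (118 malicious and 100 benign files, chosen only so that the rates line up with the published ones; they are not Microsoft's test data) to show how each metric is computed.

```python
from typing import Dict

def classification_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """Standard binary-classification metrics, with 'malware' as the positive class."""
    return {
        # Of the files flagged as malware, the share that really were malicious.
        "precision": tp / (tp + fp),
        # Of the truly malicious files, the share that were flagged.
        "recall": tp / (tp + fn),
        # Of the benign files, the share wrongly flagged as malware.
        "false_positive_rate": fp / (fp + tn),
        # Of all files, the share classified correctly.
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }

# Illustrative counts only (not Microsoft's data): 118 malicious and 100 benign files.
m = classification_metrics(tp=98, fp=2, tn=98, fn=20)
for name, value in m.items():
    print(f"{name}: {value:.2f}")
# precision: 0.98
# recall: 0.83
# false_positive_rate: 0.02
# accuracy: 0.90
```

Note that precision and accuracy answer different questions: precision only considers the files the agent flagged, while accuracy considers every file it examined.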

Notably, the agent has also been tested in a real-world scenario. Microsoft asked it to review nearly 4,000 unclassified files, which the company says were created after the agent's training cutoff, so it could not have learned about them from its training data.

Operating fully autonomously, Project Ire achieved a precision of 0.89, meaning that nearly nine out of 10 files it flagged as malicious were actually malicious, the tech giant claimed. The false positive rate was reported to be four percent.

“Based on these early successes, the Project Ire prototype will be leveraged inside Microsoft’s Defender organisation as Binary Analyzer for threat detection and software classification,” the company said.