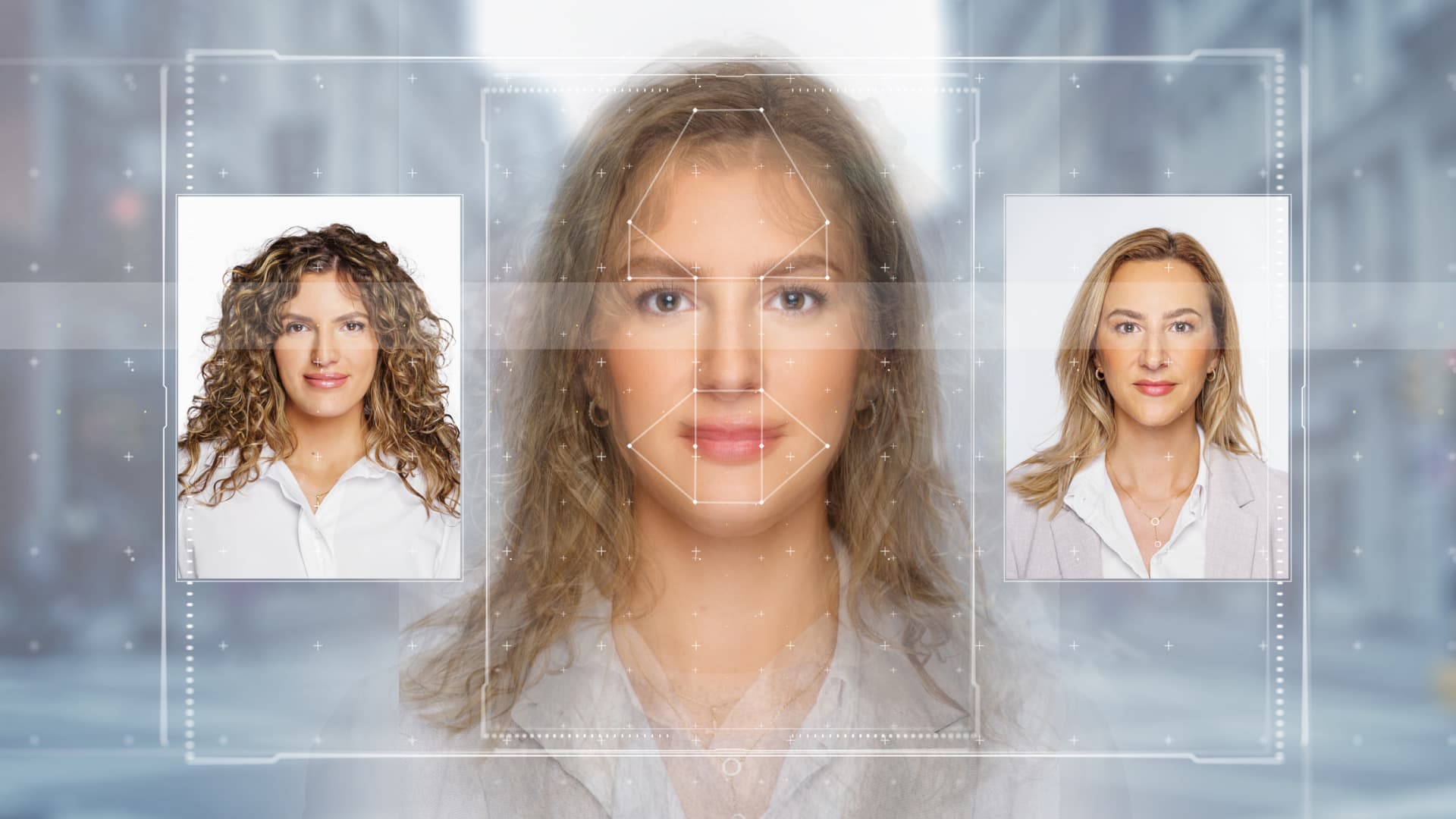

2024 is set up to be the biggest global election year in history. It coincides with the rapid rise in deepfakes. In APAC alone, there was a surge in deepfakes by 1530% from 2022 to 2023, according to a Sumsub report.

Fotografielink | iStock | Getty Images

Cybersecurity experts fear artificial intelligence-generated content has the potential to distort our perception of reality — a concern that is more troubling in a year filled with critical elections.

But one top expert is going against the grain, suggesting instead that the threat deepfakes pose to democracy may be “overblown.”

Martin Lee, technical lead for Cisco’s Talos security intelligence and research group, told CNBC he thinks that deepfakes — though a powerful technology in their own right — aren’t as impactful as fake news is.

However, new generative AI tools do “threaten to make the generation of fake content easier,” he added.

AI-generated material often contains telltale signs that it was not produced by a real person.

Visual content, in particular, has proven prone to flaws. For example, AI-generated images can contain visual anomalies, such as a person with more than two hands, or a limb that’s merged into the background of the image.

It can be tougher to distinguish between synthetically generated voice audio and voice clips of real people. But AI is still only as good as its training data, experts say.

“Nevertheless, machine generated content can often be detected as such when viewed objectively. In any case, it is unlikely that the generation of content is limiting attackers,” Lee said.

Experts have previously told CNBC that they expect AI-generated disinformation to be a key risk in upcoming elections around the world.

‘Limited usefulness’

Matt Calkins, CEO of enterprise tech firm Appian, which helps businesses make apps more easily with software tools, said AI has a “limited usefulness.”

A lot of today’s generative AI tools can be “boring,” he added. “Once it knows you, it can go from amazing to useful [but] it just can’t get across that line right now.”

“Once we’re willing to trust AI with knowledge of ourselves, it’s going to be truly incredible,” Calkins told CNBC in an interview this week.

That could make it a more effective — and dangerous — disinformation tool in future, Calkins warned, adding he’s unhappy with the progress being made on efforts to regulate the technology stateside.

It might take AI producing something egregiously “offensive” for U.S. lawmakers to act, he added. “Give us a year. Wait until AI offends us. And then maybe we’ll make the right decision,” Calkins said, adding that “democracies are reactive institutions.”

No matter how advanced AI gets, though, Cisco’s Lee says there are some tried and tested ways to spot misinformation — whether it’s been made by a machine or a human.

“People need to know that these attacks are happening and [be] mindful of the techniques that may be used. When encountering content that triggers our emotions, we should stop, pause, and ask ourselves if the information itself is even plausible,” Lee suggested.

“Has it been published by a reputable source of media? Are other reputable media sources reporting the same thing?” he said. “If not, it’s probably a scam or disinformation campaign that should be ignored or reported.”