Meta founder and CEO Mark Zuckerberg speaks during Meta Connect event at Meta headquarters in Menlo Park, California on September 27, 2023.

Josh Edelson | AFP | Getty Images

At Meta’s annual Connect conference last month, virtual reality enthusiasts gathered to hear about Mark Zuckerberg’s multibillion-dollar bet on the metaverse, the technology that’s supposed to define the company’s future.

But at this year’s event, VR developers were inundated with panel discussions about a topic that’s quickly becoming less about tomorrow and more about the present: artificial intelligence.

“Don’t tell Mark, but it feels less mixed reality and more AI these days,” joked Joseph Spisak, who joined the company as director of product development for generative AI two months earlier, during his session at Connect. “It kind of feels like an AI conference, which is kind of in my wheelhouse.”

Sandwiched between panels about Meta’s latest Quest 3 VR headset and augmented reality developer software were several sessions dedicated to Llama, Meta’s large language model (LLM) that’s gained popularity since OpenAI’s ChatGPT chatbot exploded onto the scene in November, sparking a sprint by leading tech companies to bring competitive offerings to market.

Zuckerberg, who changed Facebook’s name to Meta in late 2021 to signal his commitment to the metaverse, reminded Connect attendees that Llama powers the company’s latest digital assistants, which were unveiled at the conference.

While Zuckerberg still views the growth of the nascent metaverse as critical to his company’s success, AI has emerged as the market he’s trying to win today. Meta views Llama and its family of generative AI software as the open source alternative to GPT, the LLM from Microsoft-backed OpenAI, and Google’s PaLM 2, which powers the search company’s Bard AI technology.

Industry experts compare Llama’s positioning in generative AI to that of Linux, the open source rival to Microsoft Windows, in the PC operating system market. Just as Linux software made its way into corporate servers worldwide and became a key piece of the modern internet, Meta sees Llama as the potential digital scaffolding supporting the next generation of AI apps.

Andrew Bosworth, Chief Technology Officer of Facebook, speaks during Meta Connect event at Meta headquarters in Menlo Park, California on September 27, 2023.

Josh Edelson | AFP | Getty Images

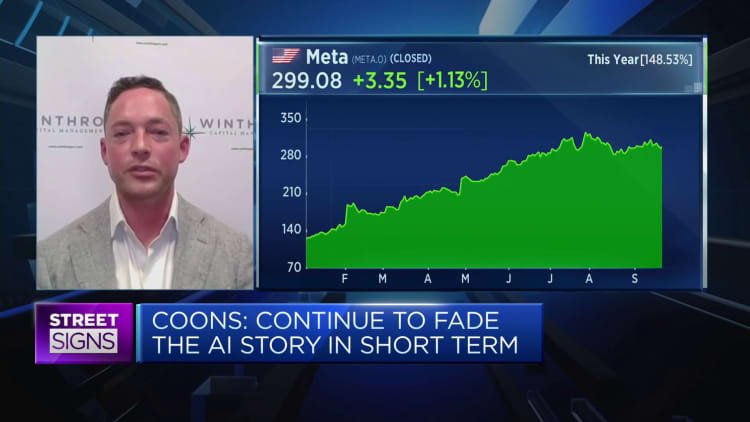

On Wall Street, Llama is hard to value and, for many investors, hard to understand. Because AI researchers are at a premium and the infrastructure needed to build and run models is enormously costly, Meta is investing heavily to build Llama, the updated Llama 2 that was introduced in July, and related generative AI software.

After the July announcement, Yann LeCun, the AI researcher Zuckerberg hired in 2013 to lead Facebook’s new AI research group, wrote on Twitter, “This is going to change the landscape of the LLM market.”

But open source means Meta is giving away the software for free to developers, a dramatically different approach from the traditional software license and subscription models and far afield from the highly lucrative digital ad business that turned Facebook into an internet powerhouse.

In announcing Llama 2, Meta said the new version would have a commercial license that allows companies to integrate it into their products. The company has said it isn’t focused on monetizing Llama 2 directly, but it does earn an undisclosed amount of money from cloud-computing companies like Microsoft and Amazon, which offer access to Llama 2 as part of their own generative AI enterprise services.

Zuckerberg said on the company’s second-quarter earnings call that he doesn’t expect Llama 2 to generate “a large amount of revenue in the near term, but over the long term, hopefully that can be something.”

Attracting top talent

Meta is looking to benefit from Llama in other ways.

Zuckerberg told analysts in July that improvements made to Llama by third-party developers could result in “efficiency gains,” making it cheaper for Meta to run its AI software. Meta said it expects capital expenditures for 2023 to be in the range of $27 billion to $30 billion, down from $32 billion last year. Finance chief Susan Li said the figure will likely grow in 2024, driven in part by data center- and AI-related investments.

Influence brings its own advantages. If the world’s leading AI researchers use Llama, Meta could have an easier time hiring skilled technologists who understand the company’s approach to development. Facebook has a history of using open source projects, such as its PyTorch coding framework for machine learning apps, as a recruiting tool, luring technologists who want to work on cutting-edge software projects.

Spisak helped oversee PyTorch and other open source AI projects when he worked at Meta from 2018 until January 2023. He left the company for a brief stint at Google and returned to Meta in July.

Meta is also betting that third-party developers will steadily improve Llama 2 and related AI software so that it runs more efficiently, a way of outsourcing research and development to an army of volunteers.

Cai GoGwilt, chief technology officer of legal tech startup Ironclad, said the open source community worked on the first version of Llama to “make it faster and make it run on a mobile phone.” GoGwilt said his company is waiting to see how enthusiastic developers will bolster Llama 2.

“Part of the reason we’re not immediately using it is because the bigger interest for us is what the open source community is going to do with it,” GoGwilt said.

Meta debuted the original Llama LLM in February, offering it in several variants ranging from 7 billion to 65 billion parameters. Parameters are essentially variables that influence the size of the model and how much data it processes. In general, more parameters mean a more powerful model, with the tradeoff being the cost of running and training the AI software.
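To make the cost tradeoff concrete, here is a rough back-of-the-envelope sketch of how much memory the model weights alone would occupy at the smallest and largest sizes mentioned above. The bytes-per-parameter figures and the function name are illustrative assumptions, not anything Meta has published, and the estimate ignores everything else a running model needs.

```python
# Back-of-the-envelope estimate of weight storage for a model of a given size.
# Assumption: each parameter is stored at a fixed numeric precision
# (4 bytes for fp32, 2 for fp16, 1 for int8). Activations, caches and
# optimizer state are ignored, so real requirements are higher.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Approximate memory for the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Smallest and largest variants of the original Llama cited in the article.
for label, params in [("7B", 7e9), ("65B", 65e9)]:
    gb = weight_memory_gb(params)
    print(f"Llama {label}: roughly {gb:.0f} GB of weights at fp16")
```

Under these assumptions the largest variant needs roughly nine times the memory of the smallest before any computation happens, which is one reason bigger models cost more to run.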

Like OpenAI’s GPT and other LLMs, Llama is an example of a transformer neural network, the AI software developed by a team of Google researchers that’s become the foundation for generative AI, which generates smart responses and clever images based on simple text prompts.

To help with the computationally intensive process of training gigantic AI models like Llama, Meta has been using its own Research SuperCluster supercomputer, built to incorporate a whopping 16,000 Nvidia A100 GPUs, the AI industry’s “workhorse” computer chips.

Although Llama was originally incubated inside Meta’s Fundamental AI Research team (FAIR), it’s since moved to the company’s generative AI organization led by Ahmad Al-Dahle, who previously spent over 16 years at Apple. Zuckerberg announced the group in late February.

Meta said it took six months to train Llama 2, starting in January and ending in July, using a mix of “publicly available online data,” which doesn’t contain any Facebook user information. It’s unclear whether Meta plans to incorporate user data into the forthcoming Llama 3.

As Zuckerberg strives for efficiency, he’s got his eyes on Nvidia, which is generating billions of dollars in quarterly profits from its AI chips. Meta is one of its biggest customers. Jim Fan, a senior AI scientist at Nvidia, said in a post on X that it likely cost Meta $20 million to train Llama 2, considerably more than the estimated $2.4 million it took to train its predecessor.
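For a sense of scale, the two training-cost estimates quoted above can be compared directly. The short sketch below only divides one figure by the other; the constant and function names are illustrative, and both dollar amounts are outside estimates rather than numbers disclosed by Meta.

```python
# Training-cost estimates quoted in the article (not official Meta figures).
LLAMA_1_TRAINING_COST_USD = 2.4e6
LLAMA_2_TRAINING_COST_USD = 20e6


def cost_multiple(new_cost: float, old_cost: float) -> float:
    """Return how many times larger new_cost is than old_cost."""
    return new_cost / old_cost


multiple = cost_multiple(LLAMA_2_TRAINING_COST_USD, LLAMA_1_TRAINING_COST_USD)
print(f"Llama 2 cost roughly {multiple:.1f}x as much to train as its predecessor")
```

If both estimates hold, Llama 2 cost a little more than eight times as much to train as the original.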

Mainstream adoption of Llama 2 could influence Nvidia to ensure its graphics processing units (GPUs) work well with Meta-sanctioned software, lowering the company’s AI training and computing costs.

Meanwhile, Meta has its own internal AI chip projects, giving it a potential alternative to Nvidia’s processors.

“It gives them some price negotiating room,” said Arjun Bansal, CEO of enterprise startup Log10 and a former AI chip executive. “Nvidia wants to charge a lot and they can be like, ‘Hey, we got our own thing.’”

Nvidia President and CEO Jensen Huang speaks at the COMPUTEX forum in Taiwan, May 28, 2023.

Sopa Images | Lightrocket | Getty Images

Nathan Lambert remembers the energy emanating from his colleagues at AI startup Hugging Face the weekend Meta debuted its much-anticipated Llama 2.

Lambert and his teammates worked overtime to ensure the company’s infrastructure was ready to handle the influx of coders looking to take Llama 2 for a test drive.

Along with cloud-computing engines Microsoft Azure and Amazon Web Services, Hugging Face was one of Meta’s chosen launch partners for Llama 2, and arguably the most important. Developers, AI researchers and thousands of companies use Hugging Face’s platform to share code, data sets and models, making it one of the industry’s biggest communities.

Although a number of open source LLMs are available, Lambert said Llama 2 is by far the most popular.

“It’s the model that most people are playing with and that most startups are playing with,” said Lambert, who announced on Oct. 4 that he’s leaving Hugging Face, though he didn’t say where he’s going.

As with all things Zuckerberg, the project is not without controversy. Some in the industry consider Meta’s licensing agreement for Llama 2 limiting and in conflict with the spirit of collaborative development and innovation.

For instance, third-party developers must request approval from Meta to use Llama 2 if they incorporate the software into any products or services that had “greater than 700 million monthly active users” in the month prior to its July release. Critics have said this clause was a way to keep rivals like Snap or TikTok from using Llama 2 for their own services.

“It’s pretty restrictive,” said Umesh Padval, a venture partner at Thomvest Ventures and investor in AI startup Cohere, which builds proprietary LLMs. “It looks like Meta wants all the benefits of open source for their business while keeping the competition away.”

Lambert said Meta could do itself a favor with the open source community and release more details about the specific, underlying datasets used to train Llama 2 so developers could better understand the training process. Open source adherents and privacy experts have pushed for more transparency into what kinds of data have been used to train LLMs, but companies have so far revealed few details.

“We believe in open innovation, and we do not want to place undue restrictions on how others can use our model,” a Meta spokesperson said in a statement. “However, we do want people to use it responsibly. This is a bespoke commercial license that balances open access to the models with responsibility and protections in place to help address potential misuse.”

Despite some detractors, Meta’s model is seeing plenty of early uptake. The company disclosed at Connect that there have been “more than 30 million downloads of Llama-based models through Hugging Face and over 10 million of these in the last 30 days alone.”

Nvidia’s Fan noted in his X post that Llama 2’s new commercial license could lure more companies to experiment with the language model compared to the original Llama.

“AI researchers from big companies were wary of Llama-1 due to licensing issues, but now I think many of them will jump on the ship and contribute their firepower,” Fan wrote.

As of today, businesses investing in AI prefer to use commercially available LLMs, according to a recent TC Cowen survey of 680 firms in cloud computing. The survey found that 32% of respondents have used or plan to use commercially packaged LLMs like OpenAI’s GPT-4 software while 28% were focused on open source LLMs like Llama and Falcon, developed in the United Arab Emirates. Only 12% of respondents planned on using in-house LLMs.

Meta’s reputational challenge

At the U.S. Government Accountability Office, Taka Ariga studies how bleeding-edge technologies like LLMs could help the agency better conduct audits and investigations through its Innovation Lab.

By the end of the year, Ariga’s team is planning to finish its first experiment investigating how LLMs can potentially be used to summarize numerous GAO reports and materials on a particular topic, and then combine those files with various other potentially relevant documentation from other agencies.

“The general public or a member of Congress might say, ‘What has the GAO done in the area of nuclear safety?’” Ariga said, regarding the LLM project. “Of course, we have done a lot of work, but that’s sort of report-by-report basis; you can’t do that kind of sort of topical search.”

The GAO is currently using AWS’ Bedrock generative AI service to help the agency experiment with various popular LLMs, including proprietary models offered by startups like Cohere and Anthropic.

While AWS recently said Bedrock will soon support Llama 2, Ariga said the GAO is first testing Anthropic’s Claude LLM and will likely pass on using Llama 2 because of Meta’s poor reputation in Washington.

Meta has earned the ire of lawmakers over the years due to a host of issues, including data privacy scandals, antitrust investigations and allegations that Facebook censors conservative voices, Ariga noted, likening Zuckerberg to Elon Musk, the CEO of Tesla and owner of X.

“Mark Zuckerberg is, just like Elon, a bit of a lightning rod when it comes to political technology,” Ariga said.

“We know that while AI has brought huge advances to society, it also comes with risk,” Meta’s spokesperson said. “Meta is committed to building responsibly and we are providing a number of resources like our responsible use guide to help those who use Llama 2 do so.”

Even among prospective customers that are unconcerned about reputational issues, Meta has to prove that it has superior LLM technology.

Nur Hamdan, a product manager at AI startup aiXplain, said OpenAI’s GPT-4 is better than Llama 2 at understanding context over long, extended conversations. That means GPT-4 would likely produce conversations in a way that feels more lifelike, Hamdan said.

Tests comparing GPT-4, Llama 2 and other LLMs are becoming routine. In one such test, researchers discovered that GPT-4 was able to generate better software code than Llama 2. Meta has since released a version of Llama 2 specifically for creating code.

Sam Altman, CEO of OpenAI, at an event in Seoul, South Korea, on June 9, 2023.

Bloomberg | Bloomberg | Getty Images

In today’s land grab, Meta is competing against Amazon, Google and heavily funded startups like OpenAI and Cohere. They’re each aiming to be the cornerstone of next-generation apps. Meta sees open source as a key advantage, versus other companies that are selling the technology and packaging it with other services.

“Somebody like Google or Microsoft, they may all be a little bit conflicted there,” said longtime infrastructure technology executive Guido Appenzeller, who held senior roles at VMware and Intel. “Facebook was not and that’s sort of how they move forward and democratizing this, giving sort of broad access to open source. I think it’s something incredibly powerful.”

A Microsoft spokesperson said in an emailed statement that the company will provide customers with options and let them choose what model they prefer, whether it’s “proprietary, open source, or both.”

“Each foundational model has unique benefits and we hope to make it easy for customers to select, fine-tune, and deploy them responsibly to maximize the outcome from these tools,” Microsoft said.

Representatives from Amazon and Google didn’t respond to requests for comment.

Llama’s impact on the technology industry could rival that of Kubernetes, the open source data center infrastructure software that Google released in 2014, experts said. In giving away Kubernetes, Google dramatically impacted the business models of once-hot startups like Docker and CoreOS, which Red Hat acquired in 2018.

Meta is deploying a Kubernetes-like strategy with Llama 2, but in a market that’s expected to be much bigger.

“I’m a fan of Facebook, I understand what Mark has done,” Thomvest’s Padval said. “They’re reinventing the company.”

However, open source doesn’t always win, and Padval acknowledged that “in this case, I don’t know how it’s going to evolve.”